
EDIT: Just to be clear, everything in this readme is currently an idea; the software to do this doesn't exist yet. I may never write it. But I just really wanted to write down the idea because I thought it was cool.

🍠Tuber

Serve Your Media Without Limits From a "Potato" Computer Hosted in Mom's Basement:

Take our Beloved "Series of Tubes" to Full Power

Tubers are enlarged structures used as storage organs for nutrients [...] used for regrowth energy during the next season, and as a means of asexual reproduction. Wikipedia

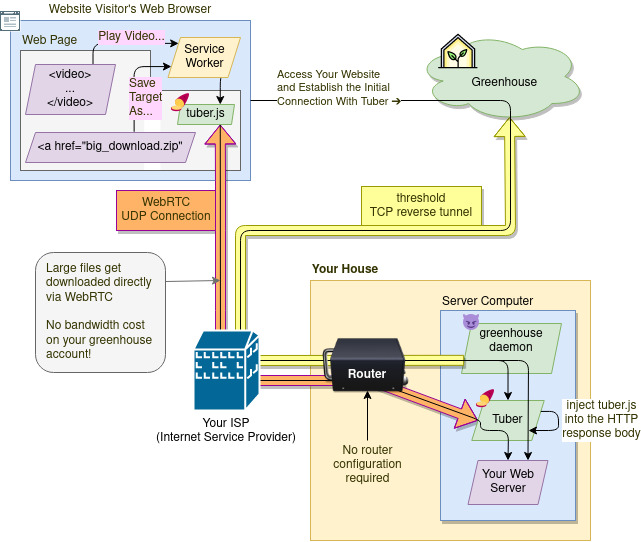

Tuber is a stand-alone HTTP "middleware" or "reverse-proxy" server designed to enable a P2P CDN (peer to peer content delivery network) on top of ANY* existing web server or web application, without requiring modifications to the application itself.

Tuber's client-side code is a progressive enhancement. It requires JavaScript and WebRTC support in order to "function"; however, it won't break your site for users whose web browser does not support those features or has those features turned off.

* Tuber uses a ServiceWorker to make this possible, so it may not work properly on advanced web applications which already register a ServiceWorker.
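A minimal sketch of what that progressive-enhancement check could look like. Everything here is hypothetical: the `BrowserCapabilities` shape, the `chooseTuberMode` name, and the decision to back off when the application already has its own ServiceWorker are assumptions for illustration, not existing Tuber code.

```typescript
// Hypothetical snapshot of what the browser supports. In a real page these
// flags would come from checks such as `"serviceWorker" in navigator` and
// `typeof RTCPeerConnection !== "undefined"`.
interface BrowserCapabilities {
  hasServiceWorker: boolean;
  hasWebRTC: boolean;
  // Assumption: true when the application already registered its own ServiceWorker.
  appHasOwnServiceWorker: boolean;
}

type TuberMode = "p2p" | "passthrough";

// Decide whether to enable the P2P enhancement or leave the site untouched.
function chooseTuberMode(caps: BrowserCapabilities): TuberMode {
  // Missing features: do nothing, the site keeps working as plain HTTP.
  if (!caps.hasServiceWorker || !caps.hasWebRTC) {
    return "passthrough";
  }
  // Assumed policy: don't fight with an existing ServiceWorker.
  if (caps.appHasOwnServiceWorker) {
    return "passthrough";
  }
  return "p2p";
}
```

In "passthrough" mode nothing is registered at all, so visitors without JavaScript or WebRTC simply get the site served the normal way.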

Use Cases

1. NAT Punchthrough for Tunneled Self-hosted Servers

aka: "how 2 run home server without router login n without payin' $$$ for VPN"

The original post where I described this idea was published in 2017: https://sequentialread.com/serviceworker-webrtc-the-p2p-web-solution-i-have-been-looking-for

The idea was to utilize a cloud-based service running something like threshold for initial connectivity, but then bootstrap a WebRTC connection and serve the content directly from the self-hosted server, thus sidestepping the bandwidth costs associated with Greenhouse or any other "cloud" service.

This way, one could easily self-host a server with Greenhouse and even serve gigabytes worth of files to 100s of people without incurring a hefty Greenhouse bill at the end of the month.

2. Bandwidth Scalability for Selfhosted Media (Especially Live Streams)

aka: "how 2 self-host twitch.tv on potato internet"

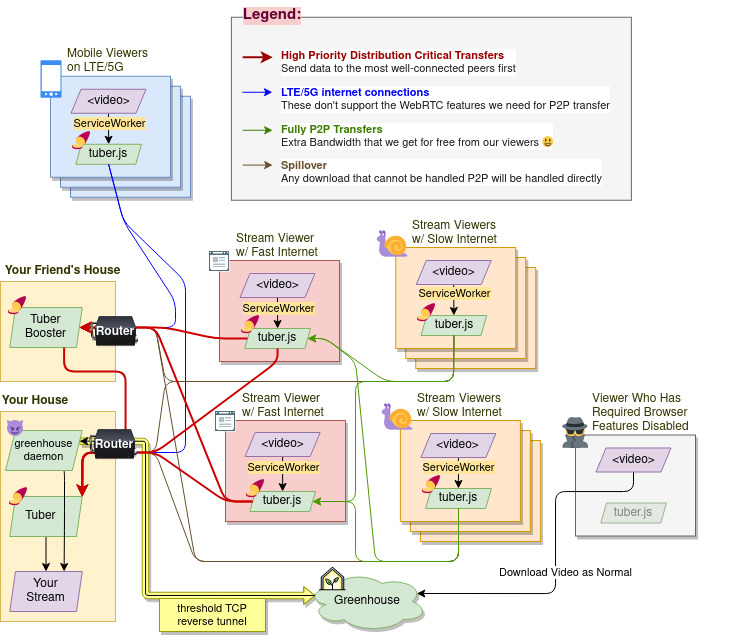

The Novage/p2p-media-loader and WebTorrent projects got me excited about P2P content distribution, where the origin server may not even need to handle all the content requests from all the web surfers any more.

If a large portion of your website's bandwidth is related to the same file (or set of files), and multiple website visitors are typically accessing that content at the same time, then Tuber should be able to expand your ability to distribute content well beyond your home server's upload speed limit.

Live video streaming is a perfect example of this. Live video is usually delivered to web browsers in chunks via formats like HLS. When you're streaming, every time your streaming PC produces the next chunk of video, each viewer will need a copy of it ASAP. If your stream gets popular, then trying to upload all those video chunks to each viewer individually will saturate your internet connection and eventually your viewers will see the stream start cutting out or go down entirely.

But with Tuber, each viewer can also help upload video chunks to other viewers, similar to how BitTorrent works. Technically, Tuber's protocol has more in common with scalable distributed streaming à la Apache Kafka, but the end result should be the same: the video chunks propagate outwards from the publisher, and as soon as a viewer gets one small piece of the file, they can start forwarding that small piece to everyone else who needs it. If your viewers' average upload speed is significantly faster than your stream's bit rate, you may never have to pay for any additional servers as your viewer count grows.
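The bandwidth argument above can be put into rough numbers. This is a back-of-the-envelope sketch under idealized assumptions (no protocol overhead, every viewer's spare upload is perfectly usable, all rates in the same unit); the `maxViewers` function and its parameters are made up for illustration.

```typescript
// Idealized capacity model: N viewers each need `bitrate`, and the swarm can
// supply `originUpload + N * avgViewerUpload` in total.
//   N * bitrate <= originUpload + N * avgViewerUpload
//   N <= originUpload / (bitrate - avgViewerUpload)
function maxViewers(
  originUpload: number,
  bitrate: number,
  avgViewerUpload: number = 0, // 0 means "no P2P, origin serves everyone"
): number {
  // The origin must be able to push out at least one full copy of the stream.
  if (originUpload < bitrate) {
    return 0;
  }
  const deficitPerViewer = bitrate - avgViewerUpload;
  // Each viewer contributes at least as much as it consumes: no upper bound
  // in this model.
  if (deficitPerViewer <= 0) {
    return Infinity;
  }
  return Math.floor(originUpload / deficitPerViewer);
}
```

For example, with a 20 Mbps home upload and a 4 Mbps stream, `maxViewers(20, 4)` gives 5 viewers without P2P, while `maxViewers(20, 4, 3)` gives 20 once each viewer can re-upload 3 Mbps. Real swarms will do worse than this model, which is why "significantly faster" is the safer requirement.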

Tuber Implementations:

- Permanent/App
  - Written in Golang, Pion WebRTC
- Ephemeral/Web
  - Written in TypeScript, Web Browser Only

Tuber Operation Modes:

- Central Planner
  - Maintain Content and Peer Database
  - Assign Peer to Peer connection candidates to Peers
  - Assign content partitions to Peers
  - Give upload/download priority instructions to peers
  - Collate and Compare metrics
  - rank peers by longevity and upload bandwidth
  - detect and mitigate lying peers?
- Content Origin
  - HTTP reverse proxy server
  - inject serviceWorker into the page
  - track content that should be p2p-distributed
  - "which content will be requested next?" prediction/mapping per page..?
  - auto-detect or configure for HLS or other "stream-like" behavior
  - webhooks for adding new content
- Globally Dialable Gateway
  - STUN server..?
  - If it's also a Content Peer, maybe it can serve files directly over HTTP?
- Content Peer
  - Can be registered as "Trusted" with Central Planner
  - Partition / File Store
  - WebRTC DataChannel connections to other peers.
- ServiceWorker
  - Intercepts HTTP requests from the web browser
  - Can handle request via direct passthrough to Content Origin
  - Can handle request via Tuber protocol (through connections to peers)
  - Can handle request via HTTP request to Globally Dialable Gateway?
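To make the three ServiceWorker options listed above a bit more concrete, here is a hypothetical sketch of how it might pick the order in which to try them for one intercepted request. The `RequestContext` fields, the `planRoutes` name, and the preference order (peers, then gateway, then origin) are all assumptions; nothing like this is implemented yet.

```typescript
type Route = "peers" | "gateway" | "origin";

// Hypothetical facts the ServiceWorker would know about an intercepted request.
interface RequestContext {
  isP2PContent: boolean;     // is this URL tracked for P2P distribution?
  connectedPeers: number;    // open DataChannel connections right now
  gatewayAvailable: boolean; // is a Globally Dialable Gateway known?
}

// Return the routes to try, in order. The Content Origin is always the last
// resort, so a request never fails just because the P2P layer is unavailable.
function planRoutes(ctx: RequestContext): Route[] {
  const routes: Route[] = [];
  if (ctx.isP2PContent) {
    if (ctx.connectedPeers > 0) {
      routes.push("peers");
    }
    if (ctx.gatewayAvailable) {
      routes.push("gateway");
    }
  }
  routes.push("origin");
  return routes;
}
```

Keeping "origin" at the end of every plan is what would preserve the progressive-enhancement property: ordinary page assets go straight through, and P2P content still loads when no peers are connected.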

Tuber Architecture for Scalability

Tuber is like "State Communism": A central planner orchestrates / enforces transfer of data according to:

"from each according to their means, to each according to their needs."

https://github.com/owncast/owncast/issues/112#issuecomment-1007597971
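One way to picture the "means" and "needs" idea is a single planning round for one chunk. This is only a sketch: the `PeerState` and `Transfer` shapes, the `planRound` name, and the greedy strategy (feed the strongest uploaders first so they can forward the chunk in the next round) are assumptions, not a description of an existing planner.

```typescript
interface PeerState {
  id: string;
  uploadSlots: number; // "means": how many copies this peer can send per round
  hasChunk: boolean;   // peers without the chunk are the ones with "needs"
}

interface Transfer {
  from: string;
  to: string;
}

// Plan one round of transfers for a single chunk.
function planRound(peers: PeerState[]): Transfer[] {
  // Peers that hold the chunk and can upload, strongest first.
  const senders = peers
    .filter((p) => p.hasChunk && p.uploadSlots > 0)
    .sort((a, b) => b.uploadSlots - a.uploadSlots)
    .map((p) => ({ id: p.id, slots: p.uploadSlots }));

  // Peers that still need the chunk; serve good uploaders first because they
  // can pass it on to more peers in the following round.
  const receivers = peers
    .filter((p) => !p.hasChunk)
    .sort((a, b) => b.uploadSlots - a.uploadSlots);

  const transfers: Transfer[] = [];
  let s = 0;
  for (const receiver of receivers) {
    // Skip senders whose slots are used up.
    while (s < senders.length && senders[s].slots === 0) {
      s++;
    }
    if (s === senders.length) {
      break; // no upload capacity left this round
    }
    senders[s].slots--;
    transfers.push({ from: senders[s].id, to: receiver.id });
  }
  return transfers;
}
```

With an origin that has two upload slots and three waiting viewers, the first round sends the chunk to the two viewers with the most upload capacity; the third viewer gets it from one of them in the next round.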

Notes

https://github.com/onedss/webrtc-demo

https://github.com/gortc/gortcd

https://github.com/coturn/coturn

// this allows us to see if the web page is served via http2 or not:

// window.performance.getEntriesByType('navigation')[0].nextHopProtocol

// but unfortunately caniuse says its at 70% globally.. its not in safari at all, as usual.

// at least in firefox, for me, with greenhouse caddy server, this web socket is using http1

// so it actually makes another socket instead of using the existing one. (this is bad for greenhouse.)

// ideally in the future we could use http w/ Server Sent Events (SSE) for signalling under HTTP2,

// and WebSockets for signalling under HTTP1.1
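The selection rule described in those comments could be sketched like this. The function name and the fallback behavior are assumptions: the notes only say SSE under HTTP2 and WebSockets under HTTP1.1, so treating "h3" like "h2" and falling back to WebSockets when the protocol is unknown (for example in Safari) are my own guesses.

```typescript
type SignallingTransport = "sse" | "websocket";

// `nextHopProtocol` is the ALPN protocol ID reported by the browser, e.g.
// "http/1.1", "h2" or "h3". Pass undefined when the browser does not expose it.
function pickSignallingTransport(
  nextHopProtocol: string | undefined,
): SignallingTransport {
  // Multiplexed protocols: an SSE stream shares the existing connection.
  // (Including "h3" here is an assumption.)
  if (nextHopProtocol === "h2" || nextHopProtocol === "h3") {
    return "sse";
  }
  // HTTP/1.1, empty string, or unsupported API: use a WebSocket.
  return "websocket";
}
```

In a browser the argument would come from `window.performance.getEntriesByType('navigation')[0].nextHopProtocol`, as in the note above.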

https://stackoverflow.com/questions/35529455/keeping-webrtc-streams-connections-between-webpages

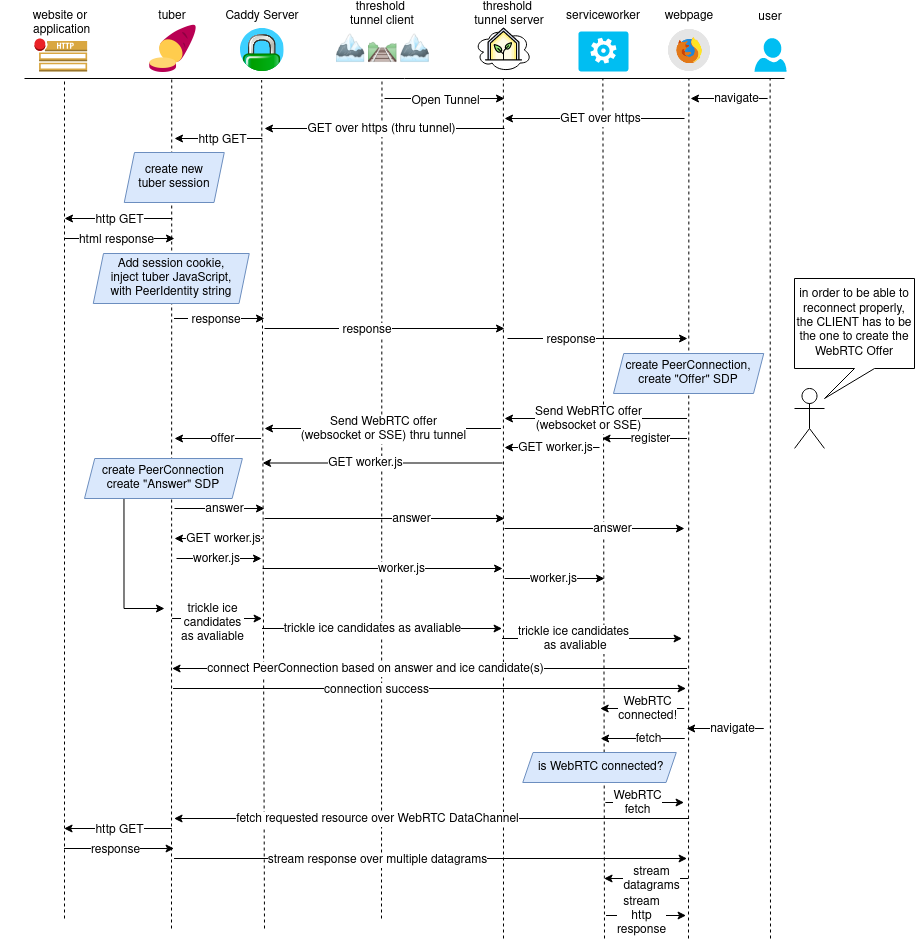

There is the IceRestart constraint which might help. Apparently you can save the SDP info to local storage, reuse the negotiated SDP, then call an IceRestart to quickly reconnect.

As described in section 3, the nominated ICE candidate pair is exchanged during an SDP offer/answer procedure, which is maintained by the JavaScript. The JavaScript can save the SDP information on the application server or in browser local storage. When a page reload has happened, a new JavaScript will be reloaded, which will create a new PeerConnection and retrieve the saved SDP information including the previous nominated candidate pair. Then the JavaScript can request the previous resource by sending a setLocalDescription(), which includes the saved SDP information. Instead of restart an ICE procedure without additional action hints, the new JavaScript SHALL send an updateIce() which indicates that it has happended because of a page reload. If the ICE agent then can allocate the previous resource for the new JavaScript, it will use the previous nominated candidate pair for the first connectivity check, and if it succeeds the ICE agent will keep it marked as selected. The ICE agent can now send media using this candidate pair, even if it is running in Regular Nomination mode.

Filip Weiss (11:46 AM): hi! noob question: what is the proper way to reconnect a browser client? like is there a prefered way, (like saving the remote descriptor or something) or should i just do the handshake again

David Zhao (2:16 PM): @Filip Weiss you'd want to perform an ICE restart from the side that is creating the offer. Here's docs on initiating this on the browser side, and here's initiating from pion.

Bang He (9 days ago): how to set the timeout for waiting <-gatherComplete?

Filip Weiss (8 days ago): thanks. so is it correct that i have to do signaling again when the browser wants to reconnect?

Juliusz Chroboczek (8 days ago): @Bang He You don't need to use GatheringPromise if you're doing Trickle ICE, which is recommended. If you're not doing Trickle ICE, you need to wait until GatheringPromise has triggered and then generate a new SDP. You don't need a timeout, there should be enough timeouts in the Pion code already.

forest johnson🚲 (7 days ago): @David Zhao "want to perform an ICE restart from the side that is creating the offer." What happens if the answering side performs the ICE restart ? it wont work? I guess I can just try it 😄

David Zhao (7 days ago): I don't think you can. it needs to be initiated in the offer.

David Zhao (7 days ago): see: https://tools.ietf.org/id/draft-ietf-mmusic-ice-sip-sdp-39.html#rfc.section.4.4.1.1.1 (Session Description Protocol (SDP) Offer/Answer procedures for Interactive Connectivity Establishment (ICE). This document obsoletes RFC 5245.)

forest johnson🚲 (7 days ago): awesome thank you

datachannel message size limits

https://lgrahl.de/articles/demystifying-webrtc-dc-size-limit.html

https://viblast.com/blog/2015/2/5/webrtc-data-channel-message-size/
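A common way to live with those limits is to split large payloads into small messages and reassemble them on the other side. The sketch below assumes a conservative 16 KiB per message as the default; that number and the helper names are assumptions for illustration, so check the articles above before relying on a specific limit.

```typescript
// Split a payload into DataChannel-sized messages.
// The 16 KiB default is an assumed conservative value, not a measured limit.
function splitIntoMessages(
  payload: Uint8Array,
  maxMessageBytes: number = 16 * 1024,
): Uint8Array[] {
  if (!Number.isInteger(maxMessageBytes) || maxMessageBytes <= 0) {
    throw new RangeError("maxMessageBytes must be a positive integer");
  }
  const messages: Uint8Array[] = [];
  for (let offset = 0; offset < payload.length; offset += maxMessageBytes) {
    // subarray creates a view, so no bytes are copied here.
    messages.push(payload.subarray(offset, offset + maxMessageBytes));
  }
  return messages;
}

// Reassemble messages that arrived in order (reliable, ordered channel assumed).
function joinMessages(messages: Uint8Array[]): Uint8Array {
  const total = messages.reduce((sum, m) => sum + m.length, 0);
  const out = new Uint8Array(total);
  let offset = 0;
  for (const m of messages) {
    out.set(m, offset);
    offset += m.length;
  }
  return out;
}
```

A real protocol would also need some framing (for example a chunk ID and index per message) so the receiver knows which messages belong together; that part is left out here.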

Single port mode on pion WebRTC

https://github.com/pion/webrtc/issues/639

https://github.com/sahdman/ice

https://github.com/pion/webrtc/discussions/1787

https://github.com/pion/webrtc/blob/master/examples/ice-single-port/main.go

Make sure to send offer/answer before ice candidates:

John Selbie (May 15th): In the trickle-ice example, does a race condition exist such that the callback for OnIceCandidate might send a Candidate message back before the initial answer gets sent? Or do the webrtc stacks on the browser allow for peerConnection.addIceCandidate being invoked before peerConnection.setRemoteDescription and do the right thing?

Sean DuBois (8 days ago): @John Selbie Yea, it's a sharp case of the WebRTC API in a multi threaded environment

Sean DuBois (8 days ago): https://github.com/w3c/webrtc-pc/issues/2519
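One simple way to avoid that race is to hold ICE candidates in a queue until the offer/answer step is done, then flush them in order. This is a generic sketch with a made-up `CandidateQueue` class; it deliberately does not touch any real WebRTC API, so the same idea can be used on the sending side (hold candidates until the answer has been sent) or on the receiving side (hold them until the remote description has been set).

```typescript
// Buffers candidates until `release()` is called, then passes everything
// through immediately. `C` is whatever candidate type the caller uses.
class CandidateQueue<C> {
  private pending: C[] = [];
  private released = false;
  private readonly deliver: (candidate: C) => void;

  constructor(deliver: (candidate: C) => void) {
    this.deliver = deliver;
  }

  // Called whenever a new candidate shows up.
  add(candidate: C): void {
    if (this.released) {
      this.deliver(candidate);
    } else {
      this.pending.push(candidate);
    }
  }

  // Called once the offer/answer has been sent (or applied).
  release(): void {
    this.released = true;
    // splice(0) empties the buffer and returns its contents in arrival order.
    for (const candidate of this.pending.splice(0)) {
      this.deliver(candidate);
    }
  }
}
```

On the receiving side in a browser, `deliver` would wrap `peerConnection.addIceCandidate(...)` and `release()` would be called after `setRemoteDescription(...)` has resolved. On the sending side, `deliver` would write the candidate to the signalling channel.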